Automated Insight Generation Using DSPy For High Quality Results

Can AI help us learn vital life lessons from years of World Happiness Report data?

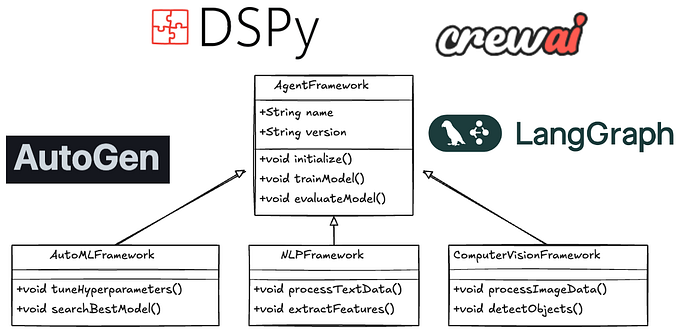

The big promise of LLMs is that they would democratize access to intelligence. However, we see over and over again that mere prompting, and in some cases even prompt augmentation via simple RAG, isn’t good enough to yield the results we want these apps to deliver. At the same time, it is becoming increasingly clear that applying manufacturing process frameworks helps companies think through and build AI systems that actually deliver on the promise of LLM apps to unlock and democratize intelligence at scale. This framing also shows up in executive suites and at large public AI conferences, where people like Jensen Huang talk about enterprises building AI factories for intelligence generation.

How we develop these factories, and the platforms on which we build these capabilities, have significant consequences for the outcomes enterprises chase across use cases like Q&A bots, summarizers, content generators, and content extractors. In each case, it is useful to think of the use case as a set of components or modules in a pipeline rather than a single LLM call. As we should expect, when these AI systems work, it is not only because of the models. Much more goes into meaningfully delivering on these use cases: the quality of the data exposed to the model, tools (exposed to agents via function calling), prompting strategies, evaluations, guardrails/quality assertions, and any external integrations. In short, thinking of an AI system as akin to a manufacturing production line is a useful analogy.

Meticulously constructing every component/module of the AI system in this way can lead to state-of-the-art outcomes. Such systems are popularly called “Compound AI Systems”.

In the rest of this blog, my intention is to show how enterprises can think through these components and leverage their own data to build meaningful experiences with bespoke pipelines that use LLMs as compute vehicles for their own use cases. Much of the content leans heavily on bringing open-source software (DSPy) and a Data Intelligence Platform (Databricks) together for demonstrations. The goal is to show how we can lean into building compound AI systems while providing useful blueprints for reasoning about the process involved.

Of specific interest to us right now is “System 2 thinking”: making LLMs produce better outputs by engaging in some form of self-refinement instead of just “yapping away” autoregressively. Sure, in an agentic pattern LLMs can reason and act via, say, ReAct, Self-Ask, or Plan-and-Solve. But in a systems-first approach, the underlying mental models are controlled by the “maker” of the system, because we care so deeply about measurable outcomes (metrics to live by) that even agents are merely “managed as a part of the whole”. With this in mind, we can borrow the popular Six Sigma DMAIC (Define, Measure, Analyze, Improve, Control) methodology to pursue continuous improvement in the AI systems we deploy. Here’s a quick look at what this could look like in a Data Intelligence Platform (a platform where deployed assets create a flywheel for improvement via automated intelligence generation).

Notice how this deliberately avoids calling out the LLMs. They are like “Neural Kernels” that emit tokens when the rest of the system interacts with them, and they are wrapped inside the larger component modules. This means they can be treated as easily replaceable entities as the state of the art changes.

Side note: If you’re interested in thinking about LLMs as neural OS kernels, the idea was popularized recently by Andrej Karpathy’s talk on his vision of what LLMs will look like in a few years.

Why is any of this important, you ask? Because we care about the quality of the output. Of course, quality itself is a delicate balancing act between efficiency, relevance, usefulness, cost-effectiveness, and the other factors we care about for a specific use case.

To demonstrate how high-quality AI-generated insights are possible, let’s examine an analysis of World Happiness Report data and explore how LLMs can be guided to produce insightful essays based on the findings. Why focus on the World Happiness Report? For one, yesterday was World Population Day. Personally, this meant an opportunity to engage with data that could give useful insight to everyone. Additionally, it’s intriguing to see how AI can help us condense longitudinal data about human experiences across the globe into meaningful insights. Through this analysis, we can explore several key questions:

- What factors contribute to human happiness?

- What actions should governments take to improve overall happiness?

- Are there discernible trends in global happiness?

- Can AI highlight glaring opportunities for improvement in societal well-being?

- Is it possible for AI to identify gaps we need to address to enhance life quality for people?

By leveraging AI to analyze this data and relay the findings in a consumable format, we can potentially uncover valuable insights that inform policy decisions and improve lives on a global scale. All the while, we can explore the power of DSPy to generate controllable, measurable outputs. To keep things simple, we’ll home in on the United States (since that’s where I live, and I want to know what’s happening here). We have data from 2006 to 2023. So I thought it would be worth quickly comparing the correlation matrix across the entire span of the data with the one for recent years, since the recent picture is what we all truly care about. Those results alone are already quite interesting, even if the evidence is only correlational.

For reference, life ladder is a 0–10 scale of subjective well-being. Think of it as a metric for measuring happiness or life satisfaction. Right out of the gate, factors such as social support (having friends and family to count on) and autonomy (freedom to make life choices) appear to correlate EVEN more positively with life ladder in recent years. The opposite holds for generosity, perceptions of corruption, and log GDP per capita. We all have our theories about why this might be, but I’ll leave those for you to ponder. This blog is about controllable AI, not politics. Still, it is clear that the pandemic has taken its toll on communities here in the U.S.

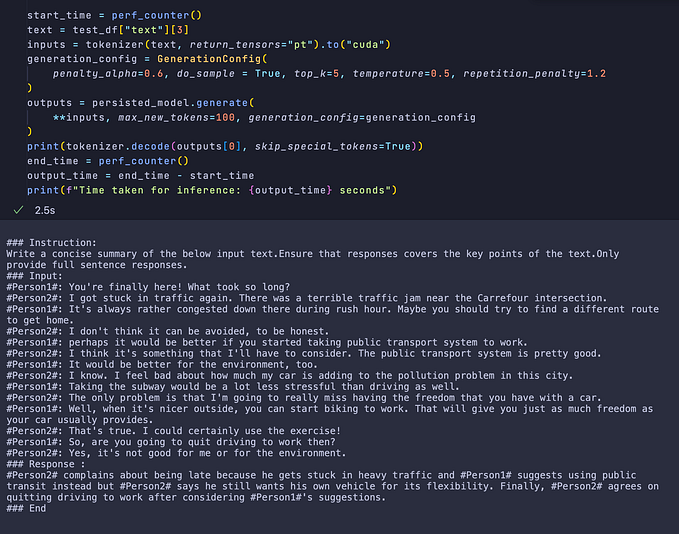

OK, so far we haven’t even introduced DSPy. For the uninitiated, DSPy is a framework for algorithmically optimizing language model prompts and weights. It gives us ways to control, guide, and systematically optimize language model prompts. We won’t go all the way into optimizing prompts in this post: I don’t have any ground-truth data, and it is outside the scope of this post. But we will certainly use DSPy to show how we can “soft guide” LLMs to produce the outcomes we want. So, how do we do this for our U.S. life ladder data? How do we go from analyses to essays meant for policy makers? The first pass is quite simple. We create a custom dspy.Signature that takes three inputs: a raw table for analyzing trends in life ladder, the correlation matrix, and the question. We then wrap the signature in chain-of-thought reasoning to generate an essay for the prompt (an essay meant for policy makers). Simple, quick, great!
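The essay-writing code passes both tables to the signature as plain strings (`stringified_df` and `stringified_corr_df`), but the post doesn’t show how those strings were built. Here is a minimal sketch of one way to build them, assuming each table is a list of row dictionaries: render it as a Markdown table, which LLMs generally read well. The helper `to_markdown_table` is hypothetical. With pandas, `DataFrame.to_markdown()` (which needs the `tabulate` package) or `DataFrame.to_string()` would serve the same purpose.

```python
def to_markdown_table(rows, columns=None, float_fmt="{:.3f}"):
    """Render a list of row dicts as a Markdown table string for an LLM prompt."""
    if not rows:
        return ""
    columns = columns or list(rows[0].keys())

    def fmt(value):
        # Round floats so the prompt stays compact and easy to read
        return float_fmt.format(value) if isinstance(value, float) else str(value)

    header = "| " + " | ".join(columns) + " |"
    divider = "| " + " | ".join("---" for _ in columns) + " |"
    body = ["| " + " | ".join(fmt(row[c]) for c in columns) + " |" for row in rows]
    return "\n".join([header, divider, *body])
```

Passing `columns` lets you keep only the fields the prompt needs (for example, the year and life ladder columns for the trends table), which also keeps token usage down.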

```python
import dspy
import IPython.display as display_module


class EssayOnePass(dspy.Signature):
    """Write an essay based on the question provided.

    Two tables will be provided to you for context. Use these tables to answer the question.

    Only use data based on the tables to validate your point of view.

    This is very important so make it high quality and meet high standards."""

    # `desc` (not `description`) is the keyword DSPy uses for field descriptions
    trends_context = dspy.InputField(desc="Use this table to understand the trends of the life ladder metric.")
    correlation_context = dspy.InputField(desc="Use this table to understand the correlations between influencing factors and the life ladder metric.")
    question = dspy.InputField(desc="The user question about the life ladder metric")
    result = dspy.OutputField(desc="An essay based on the context provided")


# Wrap the signature in a chain-of-thought module so the LM reasons before answering
write_essay = dspy.ChainOfThought(EssayOnePass)

query = """Write an essay about life ladder in the US?

Include recommendations, insights and motivations based on data.

Extract three bullet points for trends and three for correlations.

Essay has to be insightful, coherent, and easy to interpret for policy makers"""

# stringified_df / stringified_corr_df: the trends table and the correlation
# matrix rendered as strings (prepared earlier, not shown)
essay_one_pass = write_essay(
    trends_context=stringified_df,
    correlation_context=stringified_corr_df,
    question=query,
)

display_module.display_markdown(essay_one_pass.result, raw=True)
```

OK! That’s quite good! Definitely check out the results above. Pretty good, I’d say. But a couple of things are going on here. Where is the LLM call, you ask? DSPy provides a nice interface for interacting with various LLM endpoint providers. We used OpenAI’s gpt-4o via Databricks’ external model integration:

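```python
# Point DSPy at gpt-4o served through a Databricks external model endpoint.
# API_KEY and API_BASE hold your Databricks token and serving base URL (defined elsewhere).
external_gpt4o = dspy.Databricks(
    model='openai-chat-endpoint-sg-4o',
    model_type='chat',
    api_key=API_KEY,
    api_base=API_BASE,
    max_tokens=2000,
    temperature=0.7,
)

# Make this LM the default for every DSPy module
dspy.configure(lm=external_gpt4o)
```

We knew that the essay was meant for policy makers, but how do we ensure that it is indeed written at their level? This is where evaluation metrics come in. We could use simple readability metrics.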
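As a rough illustration of such a metric, here is the Flesch-Kincaid grade level, computed as 0.39 × (words / sentences) + 11.8 × (syllables / words) - 15.59. It uses a crude vowel-group syllable counter, so treat it as a simplified sketch rather than a validated implementation. Libraries such as `textstat` provide tuned versions of this and other readability formulas.

```python
import re


def count_syllables(word):
    """Crude heuristic: count vowel groups, dropping a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text):
    """Approximate U.S. school grade level needed to read `text`."""
    sentences = max(len(re.findall(r"[.!?]+", text)), 1)
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * (len(words) / sentences) + 11.8 * (syllables / len(words)) - 15.59
```

Short sentences of one-syllable words score very low (even below zero), while long sentences full of polysyllabic policy vocabulary score far above a typical reader’s grade level. That gap is what we would watch when targeting a specific audience.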

Alternatively, and even better, we can test the completion against an expectation! DSPy supports this nicely: we can ask an LLM judge to grade the completion given an expectation. Below, we define custom input/output models and a signature, then use them in a function built on DSPy’s typed chain-of-thought module.

```python
import dspy
from pydantic import BaseModel, Field


class Input(BaseModel):
    predicted_text: str = Field(description="essay input from the essay writer")
    expectation: str = Field(description="expectation from the user")


class Output(BaseModel):
    value: bool = Field(description="only return True/False")
    reason: str = Field(description="reason for the result")
    # ge/le (rather than gt/lt) so that boundary grades such as 10.0 still validate
    grade: float = Field(ge=0.0, le=10.0, description="grade score for the essay against the expectation")


class FollowSuggestionCheck(dspy.Signature):
    """check if the essay writer respected the expectation. pay close attention to the expectation and see if the essay is faithful to it"""

    input: Input = dspy.InputField()
    faithfulness: Output = dspy.OutputField()


def result_check(predicted_text: str, statement: str) -> bool:
    # Typed CoT: the judge's answer is parsed and validated against the Output model
    checker = dspy.TypedChainOfThought(FollowSuggestionCheck)
    essay_output = Input(predicted_text=predicted_text, expectation=statement)
    res = checker(input=essay_output)
    print(
        f"The grade for the essay against the expectation --> {statement} is {res.faithfulness.grade}."
        f"\nThe reason for the grade: {res.faithfulness.reason}"
    )
    # True if the judge deems the essay faithful to the expectation
    return res.faithfulness.value
```

This means we can easily ask an LLM to grade the essay against an expectation and explain the reason for the grade.

Looks like we were able to generate a decent essay. But,

- What happens when we need to do this for some other country?

- What happens when we need to do these expectation checks on the fly to guide AI-based essay completions?

- What happens when we need to guide the LLM to perform self-refinement based on the judge’s feedback?

- How do we control the changes in the persona while still maintaining the rest of the requirements?

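Enter DSPy Assertions. DSPy offers two kinds. dspy.Assert halts the pipeline if a constraint still fails after the maximum number of backtracking attempts. dspy.Suggest encourages self-refinement through retries without enforcing hard stops: DSPy logs the failure after the maximum backtracking attempts and continues execution.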

How do we do this? We can build on what we’ve done so far by adding suggestions that our completions are checked and refined against. And since we’re using Databricks for all of this, we can simply switch between LLMs based on the task. For example, I use Qwen2-72B to extract the country name and gpt-4o to generate the essay and impose the suggestions!

The route I’ve taken here is pretty straightforward. It does the following:

1. Extract the country from the user query using Qwen2.

2. Run a function to collect data from Databricks SQL and then compute correlations across all the variables.

3. Generate a candidate essay based on the prompt.

4. Evaluate the candidate against the expectation using dspy.Suggest.

5. Retry and refine the essay if the expectation isn’t met. Repeat steps 4 and 5 until the expectation is met or until we reach max_backtracking_attempts.

6. Calculate the consensus text standard.

7. Return the essay and the rationale from the chain-of-thought prompting.
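The control flow of those steps can be sketched in plain Python. Every step here is an injected callable: `extract_country`, `fetch_context`, `generate`, `judge`, and `readability` are hypothetical placeholders for the Qwen2 extractor, the Databricks SQL query plus correlations, the gpt-4o essay writer, the LLM judge, and a readability scorer. The loop imitates dspy.Suggest’s soft behavior. After `max_attempts` failed checks, it logs the failure and returns the last essay instead of raising. This is an illustration of the flow, not DSPy’s internal implementation.

```python
import logging
from dataclasses import dataclass
from typing import Callable

log = logging.getLogger("essay_pipeline")


@dataclass
class EssayResult:
    essay: str
    rationale: str
    readability: float
    attempts: int
    met_expectation: bool


def run_pipeline(
    query: str,
    expectation: str,
    extract_country: Callable[[str], str],
    fetch_context: Callable[[str], dict],
    generate: Callable[..., tuple],      # (query, context, past_essay, feedback) -> (essay, rationale)
    judge: Callable[[str, str], tuple],  # (essay, expectation) -> (passed, feedback)
    readability: Callable[[str], float],
    max_attempts: int = 3,
) -> EssayResult:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    country = extract_country(query)  # step 1: country from the query
    context = fetch_context(country)  # step 2: trends table + correlations
    past_essay, feedback = None, None
    for attempt in range(1, max_attempts + 1):
        essay, rationale = generate(query, context, past_essay, feedback)  # steps 3 and 5
        passed, feedback = judge(essay, expectation)  # step 4
        if passed:
            break
        past_essay = essay  # fed back on the next attempt
    else:
        # Soft failure, like dspy.Suggest: log it and continue with the last candidate
        log.warning("Expectation not met after %d attempts: %s", max_attempts, feedback)
    # Steps 6 and 7: score readability and return the essay with its rationale
    return EssayResult(essay, rationale, readability(essay), attempt, passed)
```

Injecting each step as a function keeps the orchestration testable with stubs and lets any single model be swapped without touching the loop.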

The way suggestions work is pretty straightforward. When a constraint (here, the expectation we set for the essay) is not met, DSPy initiates backtracking under the hood, giving the model a chance to self-refine before proceeding. It does this by internally modifying your DSPy program’s signature, adding the past output and an instruction built from the user-defined feedback on what went wrong or how to fix it.

To demonstrate how DSPy can increase the predictability and reliability of our LLM pipelines, this time we ask the following: “Write an essay about life ladder in the US. Make it a story that 8th graders can read and make sense of.” The key requirement is that the response must take the form of a story while staying at the required reading level. The following image depicts what happens when we run our essay writer program.
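To make the backtracking step concrete, here is a simplified sketch of how a retry’s inputs could be assembled: the original inputs, plus the previous attempt, plus the feedback message, all flattened into a prompt. The field names `past_result` and `instruction`, the helpers, and the placeholder strings are hypothetical. DSPy builds its own augmented signature internally, and its exact field names and prompt formatting may differ.

```python
def build_retry_inputs(inputs, past_output, feedback):
    """Copy the module's inputs and add the previous attempt plus the feedback.

    Field names are hypothetical; DSPy adds its own fields when it extends
    the signature during backtracking.
    """
    augmented = dict(inputs)  # leave the caller's inputs untouched
    augmented["past_result"] = past_output
    augmented["instruction"] = feedback
    return augmented


def render_prompt(instructions, inputs, output_name="result"):
    """Flatten instructions and named inputs into a plain-text prompt."""
    lines = [instructions, ""]
    lines += [f"{name}: {value}" for name, value in inputs.items()]
    lines.append(f"{output_name}:")
    return "\n".join(lines)


first_inputs = {
    "question": "Write an essay about life ladder in the US. "
    "Make it a story that 8th graders can read and make sense of."
}
retry_inputs = build_retry_inputs(
    first_inputs,
    past_output="<previous essay text>",  # placeholder for the rejected draft
    feedback="The essay must be told as a story at an 8th-grade reading level.",  # illustrative feedback
)
print(render_prompt("Write an essay based on the question provided.", retry_inputs))
```

Because the rejected draft and the feedback travel with the original question, the model can revise toward the expectation instead of starting from scratch.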

Here’s the result that we got, thanks to the power of DSPy!

The data reinforces timeless wisdom for happiness: nurture relationships, pursue autonomy, and embrace positivity. These simple principles, though often overlooked, are the bedrock of life satisfaction in the U.S. Maybe AI can also help solve these problems and increase people’s subjective well-being! As a community, we should start looking into this vital area and build AI tools that help the many people experiencing negative emotions, social isolation, and a clear lack of autonomy. A better tomorrow won’t build itself. We need to act, and the time is now!

Ultimately, this example shows how compound AI systems can help improve the attach rate of AI-first products and help us deploy systems reliably and predictably at scale. Many complex problems call for some form of prompt decomposition coupled with reflection and tool calling. Of course, which pattern to use depends on the use case. We can also push these systems even further by optimizing the prompts and fine-tuning the weights of the models in the pipeline, so they develop a more nuanced understanding of the task at hand. Once again, DSPy offers options for both. But we’ll save that for another time. Remember to embrace autonomy while staying connected and positive!